Learning from DevLearn

I just got back from DevLearn, and I am still processing how many practical, use-tomorrow ideas I walked away with. Below are the sessions that impacted me the most and the takeaways I am bringing back to my work.

1. Measurement Made Easier

Speaker: Alaina Szlachta

Solves for: How can we measure the impact of learning before business results show up?

Alaina’s core idea:

Build a Chain of Evidence during needs assessment.

If X happens, then Y happens, which leads to Z(s), which leads to Z(l).

- X = the learning initiative

- Y = change in capability

- Z(s) = short-term outcome

- Z(l) = long-term business goal

We often start with Z(l) because that is what leaders care about (for example, increase efficiency by 10 percent using AI).

But the chain forces us to define:

- What behavior must change first?

- What short-term signals will prove we are on the right track?

- What data do we need at each stage?

Two quick examples:

| Long-Term Goal (Z(l)) | Short-Term Outcome (Z(s)) | Capability Shift (Y) | Learning Focus (X) |
|---|---|---|---|
| Improve efficiency by 10 percent using AI | Employees try using AI tools | Employees want to use AI | Teach AI skills and reasons to adopt |
| Build an informed AI strategy | Leaders define usage guidelines | Leaders understand how to use AI safely | Teach AI, ethics, and implementation |

Big takeaway:

We do not have to wait for business results. We can measure belief shifts, behavior shifts, and early indicators along the way.

2. Power Up Scenario-Based Learning with Avatars and Storytelling

Speaker: Dena Byrne-Moss

Solves for: Making learning stick

“You do not always need a story, but you always need a narrative.”

Key insight:

According to the figures shared in the session, retention increases dramatically as you move from plain text to storytelling to interactive scenarios with avatars:

- Plain text: roughly 20 percent retention

- Storytelling: roughly 70 percent

- Scenario with avatar: roughly 90 percent

The Four-Part Scenario Arc

- Hook: a realistic problem

- Conflict: a decision point

- Consequence: the outcome of the choice

- Reflection: what was learned

Why Avatars Work

- Create emotional connection and social presence

- Make compliance and safety training feel human

- Enable branching dialogue and coaching

Design formula:

Real problem, relatable characters, meaningful choices, visible consequences, reflection

Example scenarios from the session:

- Do you skip the final review to save time?

- Do you report a hazard at the end of your shift?

Outcomes shifted based on each learner's decision, affecting trust, safety, or teamwork, or even resulting in an injury.

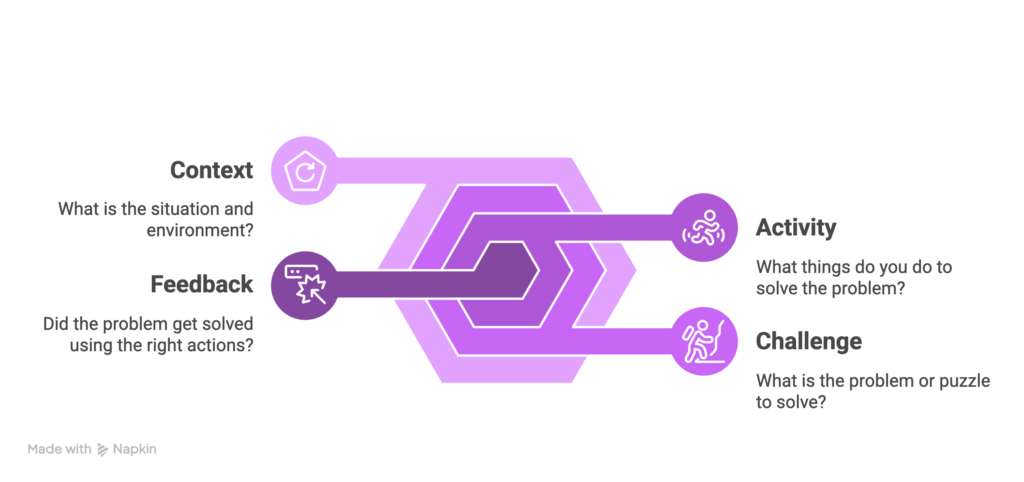

3. Escape Room Tactics for Learning

Speakers: Alan Orr and James Black

Solves for: Creating urgency and motivation

Their message was simple: give learners a high-stakes, time-boxed challenge with a reward.

This does not require full gamification. It only requires a scenario where success matters, where time is limited, and where the payoff is meaningful.

For example:

- Unlock the system before it goes offline.

- Stop the product recall in 20 minutes.

Rewards can include recognition, unlockable resources, or competition.

Final Reflection

Across all three sessions, one theme stood out.

Learning sticks when it feels meaningful and measurable.

Each session got there in a different way:

- A measurable chain of outcomes

- Scenarios with emotional consequences

- A time-boxed challenge that creates urgency

But all three design for behavior change, not only knowledge transfer.